Optical Character Recognition (OCR) is a widely used technology for extracting text from scanned documents or camera images.

One of our clients gave us a challenging task: to see whether we could improve the Tesseract output. They had been using Tesseract, but were not satisfied with its results. After the steps outlined below, we were able to improve the accuracy by 52%.

OCR is widely used for the electronic conversion of scanned images of handwritten, typewritten or printed text into machine-encoded text. Many open source and commercial OCR packages are available. One of the best open source packages is Tesseract OCR, which is comparable to commercial OCR software. That is why we consider Tesseract the best option for OCR tasks when relying on open source.

For a neatly scanned document, character recognition is easy as pie. But when the input is a receipt photographed with a camera, problems appear: overexposure, underexposure, lighting that varies across the image, and worse. On such images even the commercial OCR products won’t do well. After comparing different OCR software under these conditions, we found that none gave good results on underexposed or overexposed images, and uneven lighting across the image made matters worse. Anyone who has taken a picture with a mobile camera will have run into this.

The solution we came up with is to preprocess the image with various levels of filtering to make it OCR friendly. For this we found Textcleaner, a script that makes use of ImageMagick. You can experiment with the different options in this script to find the best output.
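The preprocessing idea can be sketched in Python as follows. This is only an illustration, not the exact pipeline we ran: it assumes the `textcleaner` script sits in the current directory and that the `tesseract` command-line tool is installed. The function names and the particular `-f` and `-o` values are hypothetical choices made for the example. Only the flags themselves (`-g -e none -f -o`) come from the command we used.

```python
import subprocess
from pathlib import Path


def textcleaner_command(src, dst, filter_size, offset, script="./textcleaner"):
    """Build a Textcleaner command line using the flags -g -e none -f -o."""
    return [script, "-g", "-e", "none",
            "-f", str(filter_size), "-o", str(offset),
            str(src), str(dst)]


def variant_settings(filter_sizes=(10, 15, 20, 25, 30), offsets=(5, 10)):
    """Return (filter_size, offset) pairs; the values are illustrative assumptions."""
    return [(f, o) for f in filter_sizes for o in offsets]


def ocr_variants(src, workdir="variants"):
    """Clean the image once per setting and return Tesseract's text for each variant."""
    out_dir = Path(workdir)
    out_dir.mkdir(exist_ok=True)
    texts = []
    for filter_size, offset in variant_settings():
        dst = out_dir / f"f{filter_size}_o{offset}.jpg"
        # Produce one cleaned variant of the input image.
        subprocess.run(textcleaner_command(src, dst, filter_size, offset), check=True)
        # "stdout" as the output base makes tesseract print the text instead of writing a file.
        result = subprocess.run(["tesseract", str(dst), "stdout"],
                                check=True, capture_output=True, text=True)
        texts.append(result.stdout)
    return texts
```

Calling `ocr_variants("Sample.jpg")` would then return one OCR text per filter setting, ready to be compared.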

What we did:

- Compared the Tesseract output with that of a commercial OCR product.

- Used Textcleaner with different options to enhance the image and make it more OCR friendly (for example, `./textcleaner -g -e none -f 10 -o 5 Sample.jpg out.jpg`).

- Ran OCR after processing with Textcleaner and compared the output across the different Textcleaner versions of the image.

- Made 10 different versions of the receipt image using Textcleaner with different filter settings, extracted the text from the OCR output of all 10 variants, compared the results, and derived the desired output using an algorithm and regex.

- You can rely on different rules for choosing among the extracted texts, such as:

  - The one with the most characters

  - The one with the most numbers

  - The one with the most characters and numbers

  - Or run a regex on the OCR outputs of all 10 Textcleaner versions and select the best match.
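The selection rules listed above could look something like the sketch below. It is a minimal illustration under stated assumptions, not the algorithm we shipped: "characters" is read here as letters, the helper names are hypothetical, and the price-like regex is just an example of a pattern one might expect on a receipt.

```python
import re


def count_letters(text):
    """Number of alphabetic characters (assumed reading of 'characters')."""
    return sum(ch.isalpha() for ch in text)


def count_digits(text):
    """Number of digit characters."""
    return sum(ch.isdigit() for ch in text)


def count_alnum(text):
    """Number of letters and digits combined."""
    return sum(ch.isalnum() for ch in text)


# Hypothetical pattern: amounts such as 12.50, as often printed on receipts.
PRICE = re.compile(r"\d+\.\d{2}")


def count_pattern(text, pattern=PRICE):
    """Number of non-overlapping regex matches found in the text."""
    return len(pattern.findall(text))


def pick_best(outputs, score):
    """Return the OCR output with the highest score (first one wins on ties)."""
    return max(outputs, key=score)
```

For example, `pick_best(texts, count_digits)` keeps the variant in which Tesseract recognised the most digits, while `pick_best(texts, count_pattern)` prefers the one with the most price-like matches. Which rule works best depends on the kind of field you need from the receipt.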

Result:

We were able to get better OCR output using the open source Tesseract. Even though we had to perform extra processing, the end result is comparable to commercial software.

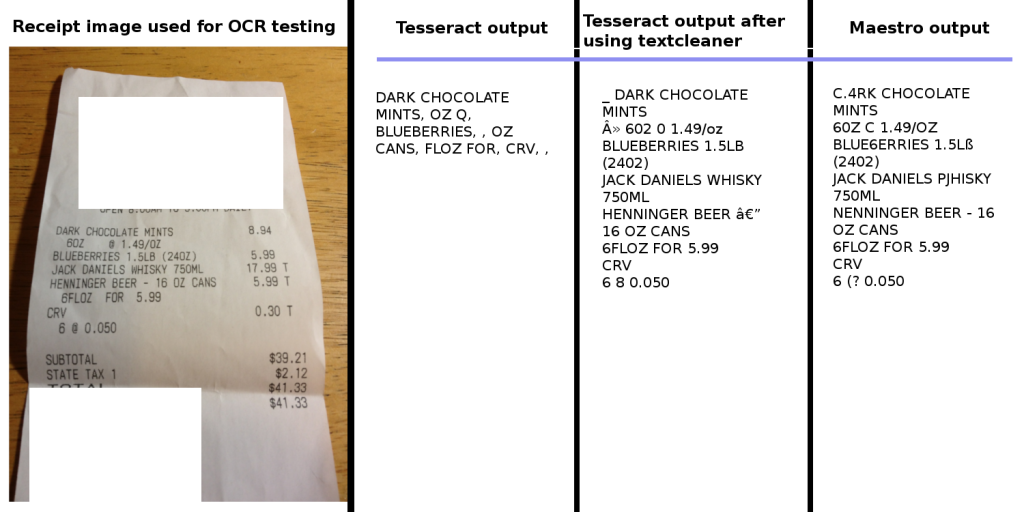

A sample of the output obtained is compared in the table below.